Overview

How does AI chat work?

AI chatbots use a technology called large language models (LLMs). LLMs are software algorithms that were trained on large sets of human-written text.

At the core of the technology is the ability to predict the next word in a sentence. Here is an example:

Imagine you asked someone to complete the sentence: “The cow jumped over the _____”

Most people would say “moon.” That’s because most of us have heard the nursery rhyme “Hey Diddle Diddle” about a cow that jumped over the moon.

You know what an LLM would answer? Go try it! I'll wait.

It answered “moon” right?!

This is because the LLM was trained on most of the text on the internet and likely came across the “Hey Diddle Diddle” rhyme often. Probably more often than passages about cows jumping over fences (cows don’t jump, FYI).

LLMs use huge clusters of math equations to predict and create the next word in a sentence - all the magic of the LLMs comes down to this.
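To make the "predict the next word" idea concrete, here is a toy sketch. It is not how a real LLM works inside (real models use neural networks, not lookup tables), and the training sentences are made up for illustration. It only counts which word most often follows the last two words it has seen.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data"; a real LLM trains on vastly more text.
TRAINING_TEXT = [
    "the cow jumped over the moon",
    "the cow jumped over the moon",
    "the cow jumped over the fence",
    "the dish ran away with the spoon",
]

def train(sentences, context_size=2):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts

def predict_next(counts, prompt, context_size=2):
    """Return the most frequently seen next word, or None if the context is new."""
    context = tuple(prompt.lower().split()[-context_size:])
    followers = counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train(TRAINING_TEXT)
print(predict_next(model, "The cow jumped over the"))  # moon
```

"moon" wins because it follows "over the" twice in the toy data, while "fence" only shows up once.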

But all their “knowledge” is hard coded. Once the math equations are set, they are set for good (not exactly true but true enough). This means that once the LLM is “trained” it is done absorbing new information.

Practically speaking this means that LLMs do not have access to external information. All their “knowledge” is contained in their internal algorithms.

So when you ask the LLM for very simple information about current events, it falls flat. In the early days of ChatGPT, if you asked it the date, it would give you the date it was trained (this has been fixed, skip ahead to learn how).

So that’s it. LLMs only know about information that was included in their training data.

Well not exactly…

How are AI agents different from chatbots?

It turns out that the ability to predict the best next word in a sentence creates an emergent property: the ability to "reason".

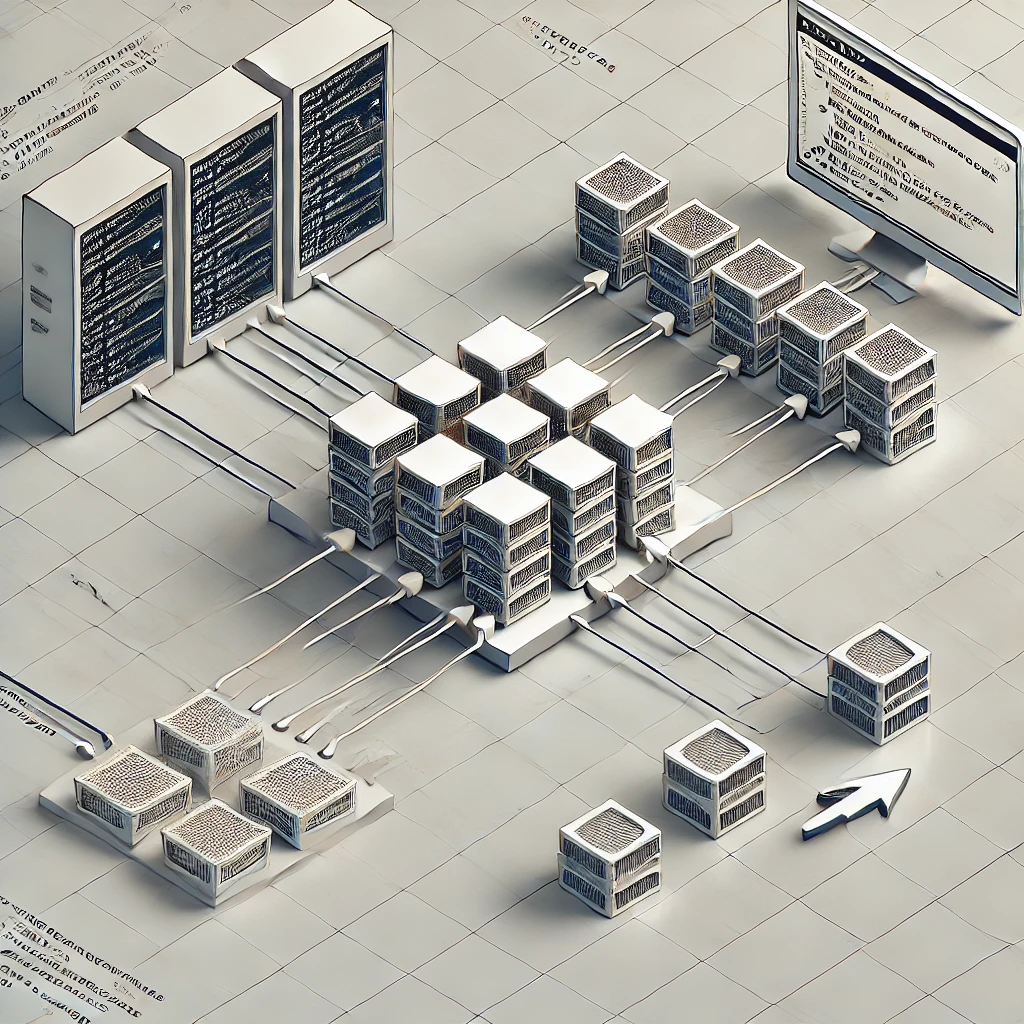

In addition to carrying on a conversation, the AI agent can also pick a tool to use based on the conversation's needs.

When given the appropriate tools, and the context of when to call those tools, the current AI models are pretty good at picking the right tool for the job. So the interaction changes...

From this...

To this...

Standard AI chatbots use only their internal knowledge to respond to a message.

AI agents can get information from external tools that can then guide their response.

So just what are these magical tools that AI agents can use? Let's find out...

How does tool calling work?

AI tools, at the most basic level, are anything that can pass text to the LLM. It's as simple as that. Tools interact with the LLMs the same way humans do - with input text.

Now there are a couple of important exceptions that we discuss later. For now, let's see how tools work.

When you create an agent you can give it access to tools. You then specify in the context when the agent should call the tool. That’s it! Time to test.

This agent has two tools: a tool to get the highest rated Hacker News post and a tool that can send an email.

Let's break down the response to this message passed to this agent:

“Get the highest rated Hacker News post and send it to my friend John@smalls.com if the post is AI related”

The agent decides to call the tool to get the top Hacker News post.

The agent uses the details of the post to decide if it is AI related.

If the post is AI related, the agent calls the email tool to send an email to John.

This all happens in the seconds after the agent receives the message. The agent is able to call the tool, get the post, and send an email by using its internal "reasoning".
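The three steps above can be sketched in a few lines of Python. Everything here is a stand-in: `get_top_hacker_news_post` returns a hard-coded post instead of calling an API, `Outbox` only records emails instead of sending them, and `looks_ai_related` is a simple keyword check playing the role of the model's "reasoning". In a real agent, the LLM itself decides which tool to call and when.

```python
import re
from dataclasses import dataclass, field

@dataclass
class Post:
    title: str
    url: str

@dataclass
class Outbox:
    """Stand-in for an email service; it only records what would be sent."""
    sent: list = field(default_factory=list)

    def send_email(self, to: str, body: str) -> str:
        self.sent.append((to, body))
        return f"email sent to {to}"

def get_top_hacker_news_post() -> Post:
    # Hypothetical stub: a real tool would call an API here.
    return Post(title="Show HN: An open source LLM agent framework",
                url="https://example.com/post")

def looks_ai_related(post: Post) -> bool:
    # Stand-in for the model's "reasoning"; a real agent would ask the LLM.
    words = set(re.findall(r"[a-z]+", post.title.lower()))
    return bool(words & {"ai", "llm", "agent", "agents", "gpt"})

def run_agent(recipient: str, outbox: Outbox) -> list:
    """Run the three steps and return a log of the tool calls that were made."""
    log = []
    post = get_top_hacker_news_post()      # step 1: get the top post
    log.append("get_top_hacker_news_post")
    if looks_ai_related(post):             # step 2: decide if it is AI related
        outbox.send_email(recipient, f"{post.title}\n{post.url}")  # step 3
        log.append("send_email")
    return log

outbox = Outbox()
print(run_agent("John@smalls.com", outbox))
# ['get_top_hacker_news_post', 'send_email']
```

If the post were not AI related, the log would contain only the first tool call and John would not get an email.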

Let's examine some types of agent tools:

What are types of AI tools?

There are many types of tools that can be used by AI agents. Here are some of the high-level categories:

Retrieval Augmented Generation

Retrieval Augmented Generation - or RAG - is a method for retrieving appropriate context for the agent to use in its response.

For example, a paralegal agent might be able to find the relevant case notes from a database of thousands of cases when asked to summarize a case.
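Here is a minimal sketch of the retrieval step, using the paralegal example. The case names and notes are invented, and ranking by shared words is a simplification chosen to keep the example short. Production RAG systems typically rank with embeddings and a vector database instead.

```python
import re

# Made-up case notes standing in for a database of thousands of cases.
CASE_NOTES = {
    "Smith v. Jones": "Contract dispute over late delivery of construction materials.",
    "Doe v. Acme": "Workplace injury claim involving faulty safety equipment.",
    "Lee v. City": "Zoning appeal about a proposed apartment building.",
}

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query, documents, top_k=1):
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(query_words & tokenize(item[0] + " " + item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, documents):
    """Put the retrieved notes in front of the question before it goes to the LLM."""
    context = "\n".join(f"{name}: {notes}" for name, notes in retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("Summarize the workplace injury case", CASE_NOTES))
```

The agent never sees the whole database. It only sees the handful of notes that the retrieval step judged relevant.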

API calls

Most tools use existing APIs to get information. For example, the paralegal agent described above may use an API to get the case notes.

Using existing APIs is a great way to pass data to agents. However, there are some considerations to keep in mind. Skip ahead to the Agentic Systems section to learn more.

Data Extraction

It is very common for companies to need to extract data from chats. Consider a chatbot that gathers a medical history from a patient or a sales agent that needs to get a customer's email address.

Data extraction tools are used to pull out the relevant information from the chat. This information can then be used to make decisions or to pass to other tools.
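As a rough illustration of the email address case, the sketch below pulls addresses out of a chat transcript. The chat format (a list of `role`/`text` dictionaries) is an assumption, and a regular expression is used as a stand-in. Many agent systems would instead ask the LLM to do the extraction, especially for fuzzier data like a medical history.

```python
import re

# A deliberately simple pattern; it will not cover every valid email address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def extract_emails(chat):
    """Return every email address found in the user's messages, in order."""
    found = []
    for message in chat:
        if message["role"] == "user":
            found.extend(EMAIL_PATTERN.findall(message["text"]))
    return found

chat = [
    {"role": "agent", "text": "What email should I send the quote to?"},
    {"role": "user", "text": "Use jane@example.com, thanks!"},
]
print(extract_emails(chat))  # ['jane@example.com']
```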

Running code

LLMs are very good at generating code. Several LLMs even have built-in tools to run the code they generate.

If you give the agent the ability to run code, it can run the code it writes and check it for bugs. The results are then fed back to the agent so it can iteratively improve the code.
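A bare-bones version of that loop might look like the sketch below. The two "drafts" are hard-coded to stand in for code an LLM would generate, and `run_python` is a hypothetical helper name. Note that a plain subprocess is not a sandbox, so a real system would need proper isolation before running untrusted code.

```python
import subprocess
import sys

def run_python(code: str, timeout: float = 5.0) -> dict:
    """Run a code string in a separate Python process and capture the result."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return {"ok": result.returncode == 0, "stdout": result.stdout, "stderr": result.stderr}

# Pretend these came from the LLM: a buggy first draft and a corrected retry.
first_draft = "print(1 / 0)"
second_draft = "print(sum(range(5)))"

for attempt in (first_draft, second_draft):
    outcome = run_python(attempt)
    if outcome["ok"]:
        print("worked:", outcome["stdout"].strip())
        break
    # In a real agent, this error text would be passed back to the LLM.
    print("failed:", outcome["stderr"].strip().splitlines()[-1])
```

The first draft fails with a `ZeroDivisionError`, and that error message is exactly the kind of feedback the agent would use to write the second draft.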

Scheduling

Traditional LLMs only work when you interact with them. Agents on the other hand often have a way to schedule runs.

Agents can have access to scheduling tools. Therefore, you can do things like this:

“Agent, get the top news post every morning, summarize it, and send it to me.”
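Under the hood, an "every morning" request comes down to working out when the next run should happen. The sketch below shows one simple way to do that. The function name and the 8:00 run time are assumptions, and it ignores time zones to stay short.

```python
from datetime import datetime, time, timedelta

def next_daily_run(now: datetime, run_at: time) -> datetime:
    """Return the next datetime at which a once-a-day job should run."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's slot has already passed, so schedule for tomorrow.
        candidate += timedelta(days=1)
    return candidate

now = datetime(2024, 1, 15, 9, 30)
print(next_daily_run(now, time(8, 0)))   # 2024-01-16 08:00:00
print(next_daily_run(now, time(18, 0)))  # 2024-01-15 18:00:00
```

A scheduler would sleep until that time, run the agent, and then compute the next run again.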

This is not an exhaustive list. There are many other types of tools. See the resources section for more information on AI tool types.

What are key considerations for building agentic systems?

You are ready to start building your own AI agent. Here are some key considerations to keep in mind.

Latency

When you use modern AI chatbots like ChatGPT, the latency is very low. This is because most of the time you are conducting question and answer sessions. Once your agent starts using tools, your users might experience increased latency.

Consider the example of an agent that calls other agents as tools. This is a common pattern in agentic systems.

The latency the user experiences is the sum of the latencies of all the tool calls. With multiple child agent calls, this can add up quickly and make the user experience less than ideal.

A common pattern is to not wait for the child agent responses. The child agents are called in parallel, and the parent agent continues without waiting for their responses.
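To see why parallel calls help, here is a small simulation. The child agents are fake (each one just sleeps for a made-up 0.05 seconds), and this sketch shows only the "in parallel" half of the pattern: the parent still waits for the results at the end, rather than carrying on without them.

```python
import asyncio
import time

async def child_agent(name: str, seconds: float) -> str:
    """Stand-in for a child agent call; the sleep simulates its latency."""
    await asyncio.sleep(seconds)
    return f"{name} done"

async def call_sequentially(delays):
    # Each call starts only after the previous one finishes: latencies add up.
    return [await child_agent(f"agent-{i}", d) for i, d in enumerate(delays)]

async def call_in_parallel(delays):
    # All calls start together: total time is roughly the slowest call.
    return await asyncio.gather(
        *(child_agent(f"agent-{i}", d) for i, d in enumerate(delays))
    )

def timed(coro_fn, delays):
    """Run one of the functions above and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(delays))
    return results, time.perf_counter() - start

delays = [0.05, 0.05, 0.05]
_, sequential_seconds = timed(call_sequentially, delays)
_, parallel_seconds = timed(call_in_parallel, delays)
print(f"sequential: {sequential_seconds:.2f}s, parallel: {parallel_seconds:.2f}s")
```

With three child agents, the sequential version takes about three times as long as the parallel one.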

Evaluations

How do you know if your agent is doing a good job? This is where evaluations come in.

Evaluations or "evals" are a way to measure how well your agent is doing. There are many ways to do this. One common approach is to have another agent evaluate the agent.

In this example another "eval" agent looks at the agent's input and output and determines an evaluation score. This score is stored in the database and can be used to improve the agent.
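Here is one way that flow could look in code. The `eval_agent` function is a hypothetical stub: a real eval agent would ask an LLM to grade the response, while this one just measures word overlap between the question and the answer. The table layout is also an assumption, and an in-memory SQLite database stands in for "the database".

```python
import sqlite3

def eval_agent(user_input: str, agent_output: str) -> float:
    """Hypothetical stand-in for an "eval" agent; returns a score from 0 to 1.

    A real eval agent would ask an LLM to grade the response. This stub only
    measures how many of the question's words show up in the answer.
    """
    asked = set(user_input.lower().split())
    answered = set(agent_output.lower().split())
    return round(len(asked & answered) / len(asked), 2) if asked else 0.0

def record_eval(db, user_input: str, agent_output: str) -> float:
    """Score one input/output pair and store the result."""
    score = eval_agent(user_input, agent_output)
    db.execute("INSERT INTO evals VALUES (?, ?, ?)", (user_input, agent_output, score))
    return score

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE evals (input TEXT, output TEXT, score REAL)")
record_eval(db, "what is the refund policy", "the refund policy is 30 days")
record_eval(db, "what is the refund policy", "hello there")

# Stored scores can be queried later, for example to track the average over time.
print(db.execute("SELECT COUNT(*), AVG(score) FROM evals").fetchone())
```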

Scheduling

Chatbots only work when you interact with them. AI agents can work in the background when triggered. This is where scheduling comes in.

Consider the example of an agent that combs social media for relevant information. This agent can be scheduled to run every hour or every day and provide you with the latest information.

You can even give agents the ability to schedule themselves. Imagine an agent that can post content to social media. You could give this agent the ability to create a schedule that watches for responses once a post is made.

Monitoring

Most agentic systems have a way to keep track of what the agents are doing. This is important, as an off-the-rails agent can cause a lot of problems.

Imagine if we gave our social media agent the ability to post content and it decided to post every minute or second! The creators of the agent would want to know this and be able to stop the agent quickly.

Just as you put checks in place for systems that humans use, you need to do the same for agentic systems as well.
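One simple check for the runaway social media agent is a rate limit on its actions. The sketch below is an illustration only: the `ActionMonitor` class and the "3 posts per hour" limit are made up, and a real monitoring setup would also log activity and alert a human.

```python
from collections import deque

class ActionMonitor:
    """Blocks an action once it has happened too often within a time window."""

    def __init__(self, max_actions: int, window_seconds: float):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self.timestamps = deque()
        self.blocked = 0

    def allow(self, now: float) -> bool:
        # Forget actions that are older than the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.max_actions:
            self.blocked += 1  # a real system might alert a human here
            return False
        self.timestamps.append(now)
        return True

# Allow at most 3 posts per hour, then simulate an agent posting every minute.
monitor = ActionMonitor(max_actions=3, window_seconds=3600)
decisions = [monitor.allow(now=minute * 60) for minute in range(10)]
print(decisions.count(True), "posts allowed,", monitor.blocked, "blocked")
# 3 posts allowed, 7 blocked
```

The agent calls `allow` before every post, so the limit holds even if its "reasoning" goes wrong.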

How can you access the AI agent?

So how do you actually interact with an AI agent? Here are a few ways you or your users can interact with agents:

GUI/Browser

Most of you have used ChatGPT or other AI chatbots in the browser. This is the most common way to interact with agents.

Embedded Chat

You can also embed the agent in your website or app. This is a great way to provide a seamless experience to your users.

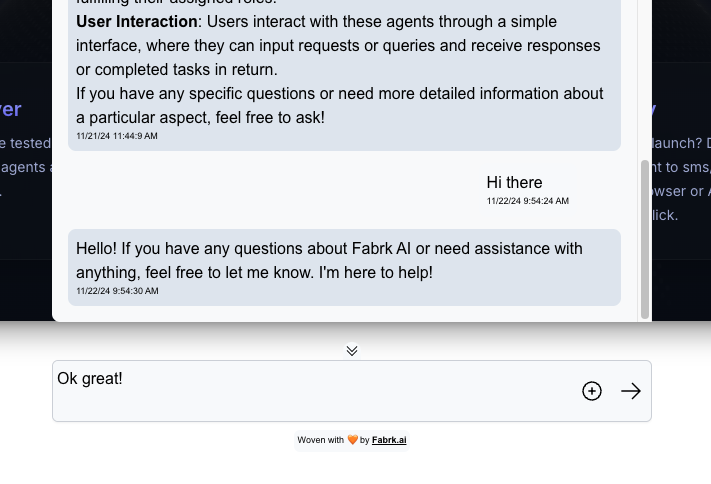

Here is Fabrk AI's embedded chatbot.

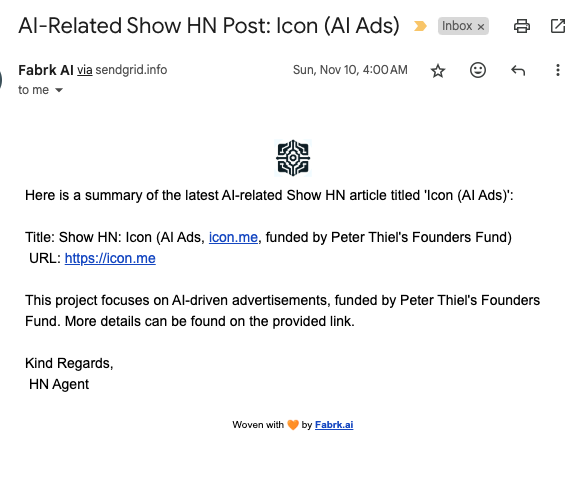

Email

It is helpful to give agents access to read and write emails. This way they can help you manage your inbox or even send notifications and updates.

Here the agent is sending a summary of an article I might be interested in.

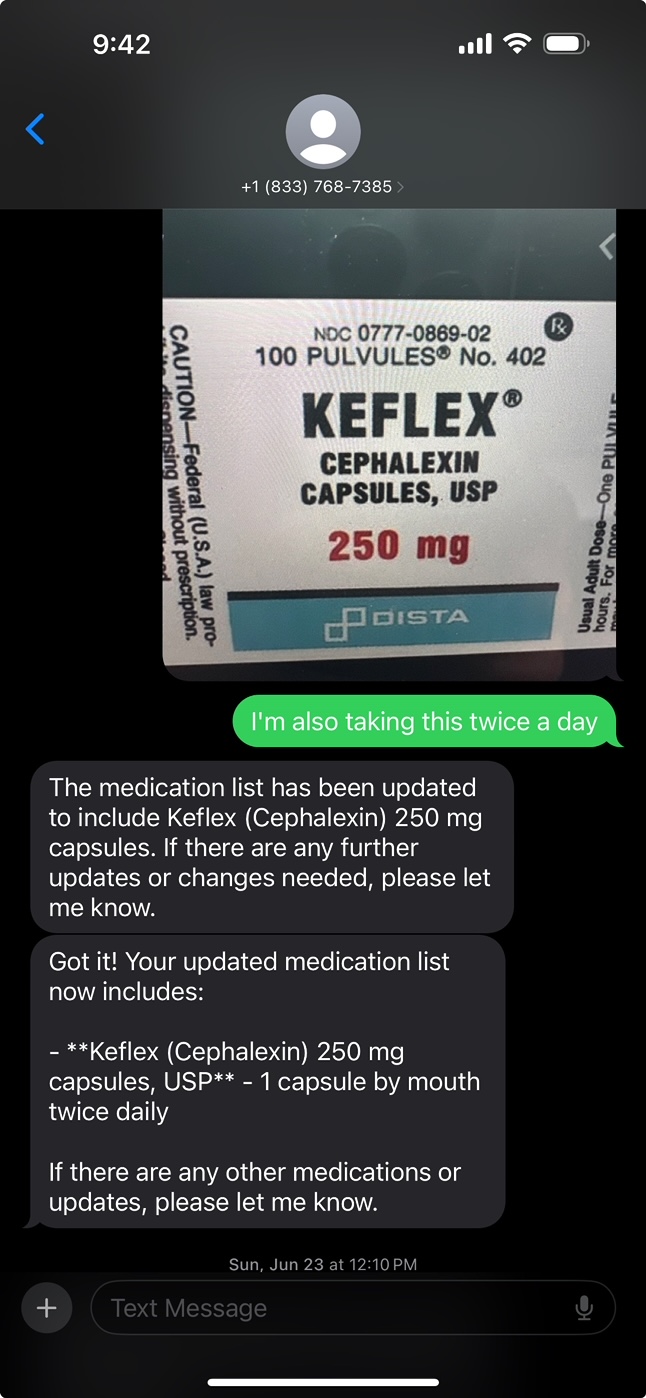

SMS/Text

You can also interact with agents through SMS or text.

This is an example of an agent that is completing a medication reconciliation for a patient. The patient took a picture of their recent medication and sent it to the agent, which added it to their medication list.

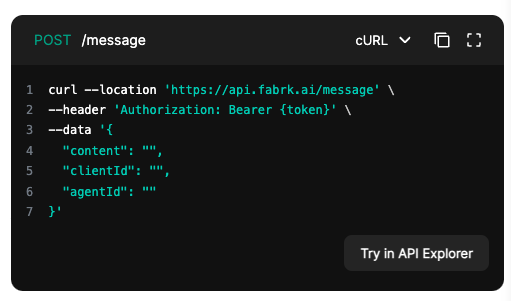

API

It's often the case that you'll want to call agents from your own app or server. This is where the API comes in.

Agent engines often have an API that you can call to run the agent.
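The exact API differs from one agent engine to the next, so treat the following as a generic sketch and not any particular product's API. The base URL, the `/agents/{id}/run` route, the JSON body, and the bearer token header are all assumptions made for illustration. The code only builds the request and does not send it.

```python
import json
import urllib.request

def build_agent_request(base_url: str, agent_id: str, message: str, api_key: str):
    """Build (but do not send) a POST request that asks an agent to run."""
    payload = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/agents/{agent_id}/run",  # hypothetical route
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )

request = build_agent_request(
    "https://api.example.com", "agent-123", "Summarize today's top post", "YOUR_API_KEY"
)
print(request.get_method(), request.full_url)
# POST https://api.example.com/agents/agent-123/run

# Sending it from your server would be: urllib.request.urlopen(request)
```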

On Device

Agents can also be run on device. This is useful for things like voice assistants or other offline applications.

Fabrk AI
